While we usually see robots in industrial or research settings, there are plenty of ways they could help in everyday life as well: an autonomous guide for blind people, for instance, or a kitchen bot that helps disabled folks cook. Or, and this one is real, a robot arm that can perform rudimentary sign language.

It’s part of a master’s thesis from grad students at the University of Antwerp who wanted to address the needs of the deaf and hearing impaired. In classrooms, courts and at home, these people often need interpreters, who aren’t always available.

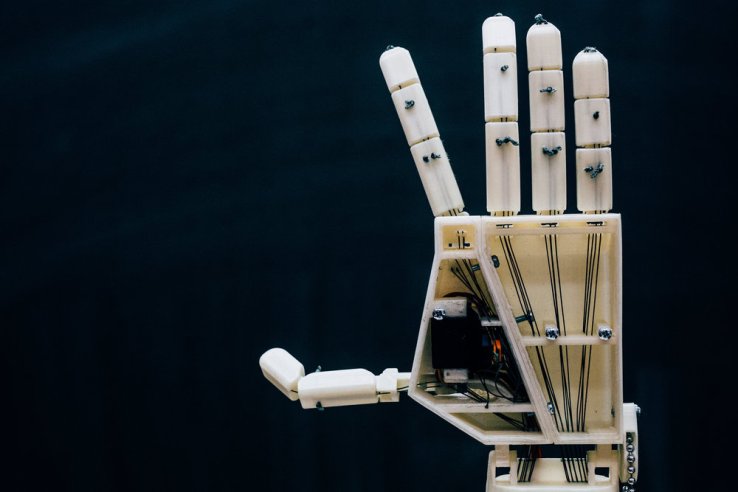

Their solution is “Antwerp’s Sign Language Actuating Node,” or ASLAN[1]. It’s a robotic hand and forearm that can perform sign language letters and numbers. It was designed from scratch and built from 25 3D-printed parts, with 16 servos controlled by an Arduino board. It’s taught gestures using a special glove, and the team is looking into recognizing them through a webcam as well.

Right now, it’s just the one hand — so obviously two-hand gestures and the cues from facial expressions that enrich sign language aren’t possible yet. But a second coordinating hand and an emotive robotic face are the next two projects the team aims to tackle.

The idea is not to replace interpreters, whose nuance can hardly be replicated, but to make sure that there is always an option for anyone worldwide who requires sign language service. It could also be used to help teach sign language: a robot doesn’t get tired of repeating a gesture for you to learn.

Why not just use a virtual hand? Good question. An app or even a speech-to-text program would accomplish many of the same things. But it’s...

Read more from our friends at TechCrunch