As robots and gadgets continue to pervade our everyday lives, they increasingly need to see in 3D — but as evidenced by the notch in your iPhone[1], depth-sensing cameras are still pretty bulky. A new approach inspired by how some spiders sense the distance to their prey could change that.

Jumping spiders don’t have room in their tiny, hairy heads for structured light projectors and all that kind of thing. Yet they have to see where they’re going and what they’re grabbing in order to be effective predators. How do they do it? As is usually the case with arthropods, in a super weird but interesting way.

Instead of having multiple eyes that each capture a slightly different image and taking stereo cues from the differences, as we do, each of the spider’s eyes is in itself a depth-sensing system. Each eye is multi-layered, with transparent retinas that each see the image with a different amount of blur depending on distance. The differing blurs from different eyes and layers are compared in the spider’s small nervous system and produce an accurate distance measurement, using incredibly little in the way of “hardware.”
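As a rough illustration, here is an idealized thin-lens picture of why two retina layers at different depths see different amounts of blur. It is an assumption made for illustration, not a model of the spider’s actual optics. Take a lens of focal length $f$ and aperture diameter $A$, an object at distance $Z$ that comes into sharp focus at image distance $s$, and a retina layer at distance $s_i$ behind the lens. All of these symbols are introduced only for this sketch. The blur circle on layer $i$ then has diameter $b_i$:

$$
\frac{1}{f} = \frac{1}{Z} + \frac{1}{s},
\qquad
b_i(Z) = A\,\frac{\lvert s - s_i \rvert}{s} = A\,\left\lvert 1 - s_i\left(\frac{1}{f} - \frac{1}{Z}\right)\right\rvert .
$$

A single blur value is ambiguous, because an object somewhat nearer or somewhat farther than the in-focus distance can produce the same blur. With two layers at $s_1 \neq s_2$, keep the sign of each blur, $\beta_i = A(1 - s_i/s)$, which is positive when the layer sits in front of the point of sharp focus. The difference between the two layers then pins down the distance:

$$
\beta_1 - \beta_2 = \frac{A\,(s_2 - s_1)}{s}
\quad\Longrightarrow\quad
\frac{1}{Z} = \frac{1}{f} - \frac{\beta_1 - \beta_2}{A\,(s_2 - s_1)} .
$$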

Researchers at Harvard have created a high-tech lens system that uses a similar approach[2], giving it the ability to sense depth without traditional optical elements.

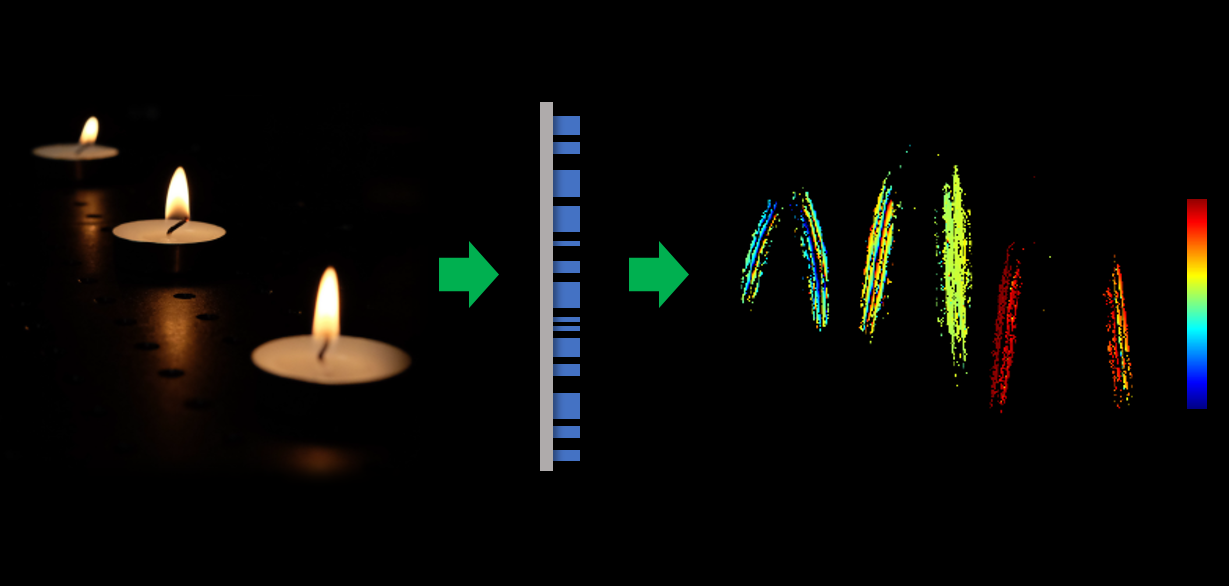

The “metalens” created by electrical engineering professor Federico Capasso and his team detects an incoming image as two similar ones with different amounts of blur, like the spider’s eye does. These images are compared using an algorithm also like the spider’s — at least in that it is very quick and efficient — and the result is a lovely little real-time, whole-image depth calculation.
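To make the comparison step more concrete, here is a minimal one-dimensional sketch of the textbook depth-from-defocus idea. It is a generic illustration, not the Harvard team’s published algorithm. It assumes Gaussian blur and two images of the same scene with hypothetical blur widths. It also relies on a standard property of Gaussian blur: the extra blur between the two images is roughly proportional to the image’s second derivative, so $I_2 - I_1 \approx \tfrac{1}{2}(\sigma_2^2 - \sigma_1^2)\,I''$. The function names and numbers are made up for this example. Converting the recovered blur difference into an actual distance would need a calibration for a specific lens, which is not shown.

```python
import math


def gaussian_kernel(sigma):
    """Sampled, normalized 1-D Gaussian kernel truncated at 4 sigma."""
    radius = int(math.ceil(4 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(signal, sigma):
    """Convolve with a Gaussian; samples too close to either edge are left as None."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    out = [None] * len(signal)
    for i in range(radius, len(signal) - radius):
        out[i] = sum(k * signal[i + j - radius] for j, k in enumerate(kernel))
    return out


def estimate_blur_difference(img1, img2, start, stop):
    """Least-squares estimate of sigma2**2 - sigma1**2 over samples [start, stop).

    Fits I2 - I1 ~ (delta / 2) * I'', where I'' is a central second difference
    of the average of the two images.
    """
    num = 0.0
    den = 0.0
    for i in range(start, stop):
        mid = [(img1[j] + img2[j]) / 2 for j in (i - 1, i, i + 1)]
        laplacian = mid[0] - 2 * mid[1] + mid[2]
        diff = img2[i] - img1[i]
        num += diff * laplacian
        den += laplacian * laplacian
    return 2 * num / den


# Hypothetical scene: a smooth 1-D texture made of two sinusoids.
scene = [math.sin(2 * math.pi * n / 50) + 0.5 * math.sin(2 * math.pi * n / 23 + 1.0)
         for n in range(400)]
sigma_near, sigma_far = 1.5, 2.0  # hypothetical blur widths seen by the two "layers"
img1 = blur(scene, sigma_near)
img2 = blur(scene, sigma_far)

# Stay away from the edges, where the blurred samples are None.
delta = estimate_blur_difference(img1, img2, 10, 390)
true_delta = sigma_far ** 2 - sigma_near ** 2
print(f"estimated {delta:.3f}, true {true_delta:.3f}")
```

The estimate should land close to the true value of 1.75. A whole-image version would run the same least-squares fit over small local windows, which gives one blur-difference value, and after calibration one depth value, per patch.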

The process is not only efficient, meaning it can be done...